OpenCV CUDA Performance Comparison (Nvidia vs Intel)

Introduction

In this post I am going to use OpenCV’s performance tests to compare the CUDA and CPU implementations. The idea is to get an indication of which OpenCV (and, more generally, computer vision) algorithms benefit the most from GPU acceleration, and therefore under what circumstances it might be a good idea to invest in a GPU.

Test setup

Software: OpenCV 3.4 compiled with Visual Studio 2017, CUDA 9.1, Intel MKL with TBB, and TBB. To generate the CPU results I simply ran the CUDA performance tests with CUDA disabled, so that the fallback CPU functions were called, by changing

#define PERF_RUN_CUDA() ::perf::GpuPerf::targetDevice()

to

#define PERF_RUN_CUDA() false

in modules\ts\include\opencv2\ts\ts_perf.hpp.

The performance tests cover 104 OpenCV functions, with each function tested for a number of different configurations (function arguments). In total 6031 CUDA performance configurations/tests ran successfully, of which only 5300 are supported by both the GPU and CPU.

Hardware: Four different hardware configurations were tested, consisting of 3 laptops and 1 desktop. The CPU/GPU combinations are listed below:

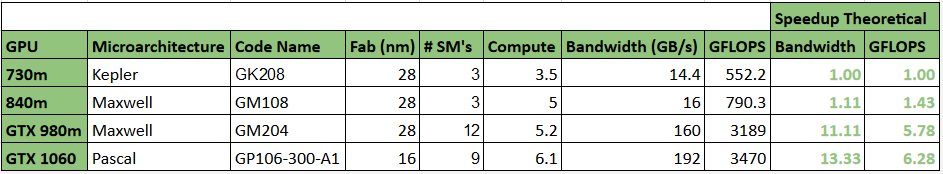

GPU specifications

The GPUs tested comprise three different micro-architectures, ranging from a low end laptop GPU (730m) to a mid range desktop GPU (GTX 1060). The full specifications are shown below, where I have also included the maximum theoretical speedup if the OpenCV functions were bandwidth or compute limited. These values are only included to give an indication of what should be possible if architectural improvements, SM count etc. have no impact on performance. In general most algorithms will be bandwidth limited, which implies that the average speedup of the OpenCV functions could be somewhere between these two values. If you are not familiar with this concept then I would recommend watching “Memory Bandwidth Bootcamp: Best Practices”, “Memory Bandwidth Bootcamp: Beyond Best Practices” and “Memory Bandwidth Bootcamp: Collaborative Access Patterns” by Tony Scudiero for a good overview.
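As a rough sketch of how such theoretical limits can be derived, the snippet below takes the ratio of peak memory bandwidth and of peak GFLOPS between a baseline device and a candidate device. The `DeviceSpec` struct and both function names are hypothetical and only serve the illustration; the actual spec sheet values are in the table and are not reproduced here.

```cpp
#include <cassert>

// Peak figures for one device, as quoted on the vendor's spec sheet.
// (Hypothetical helper type, not part of OpenCV.)
struct DeviceSpec {
    double bandwidthGBs;  // peak memory bandwidth in GB/s
    double gflops;        // peak single precision throughput in GFLOPS
};

// Upper bound on the speedup if a function is purely bandwidth limited:
// the execution time then scales with 1 / bandwidth.
inline double bandwidthBoundSpeedup(const DeviceSpec& baseline,
                                    const DeviceSpec& candidate) {
    assert(baseline.bandwidthGBs > 0.0);
    return candidate.bandwidthGBs / baseline.bandwidthGBs;
}

// Upper bound on the speedup if a function is purely compute limited:
// the execution time then scales with 1 / GFLOPS.
inline double computeBoundSpeedup(const DeviceSpec& baseline,
                                  const DeviceSpec& candidate) {
    assert(baseline.gflops > 0.0);
    return candidate.gflops / baseline.gflops;
}
```

Calling both functions with the slowest GPU as `baseline` and a faster GPU as `candidate` gives the two bounds between which the measured average speedup would be expected to fall, under the assumption that nothing else changes between the architectures.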

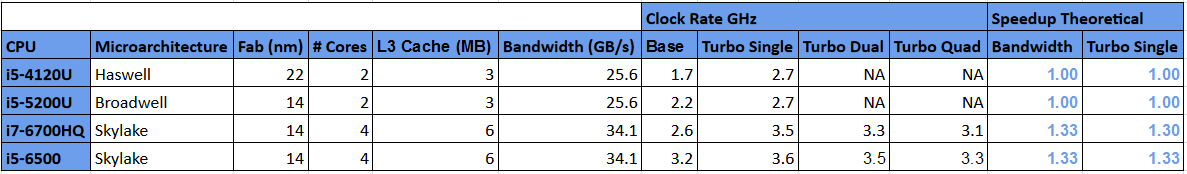

CPU specifications

The CPUs tested also comprise three different micro-architectures, ranging from a low end laptop dual core (i5-4120U) to a mid range desktop quad core (i5-6500) CPU. The full specifications are shown below, where I have again included the maximum theoretical speedup depending on whether the OpenCV functions are limited by the CPU bandwidth or clock speed (I could not find any Intel published GFLOPS information).

Benchmark results

The results for all tests are available here, where you can check if a specific configuration benefits from an improvement in performance when moved to the GPU.

To get an overall picture of the performance increase which can be achieved by using the CUDA functions over the standard CPU ones, the speedup of each CPU/GPU over the least powerful CPU (i5_4210U) is compared. The figure below shows the speedup averaged over all 5300 tests (All Configs). Because the average speedup is influenced by the number of different configurations tested per OpenCV function, two additional measures, which only consider one configuration per function, are also shown on the figure:

- GPU Min - the average speedup, taken over all OpenCV functions for the configuration where the GPU speedup was smallest.

- GPU Max - the average speedup, taken over all OpenCV functions for the configuration where the GPU speedup was greatest.
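The three measures above can be sketched as follows. This is one possible reading of the definitions, assuming the speedups of a single device over the baseline CPU have already been computed per configuration; `ConfigResult`, `SpeedupSummary` and `summarise` are hypothetical names introduced for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// One benchmark result: the OpenCV function tested and the speedup
// (baseline time / device time) measured for one configuration.
struct ConfigResult {
    std::string function;
    double speedup;
};

struct SpeedupSummary {
    double allConfigs;  // mean over every configuration
    double gpuMin;      // mean over functions of each function's smallest speedup
    double gpuMax;      // mean over functions of each function's largest speedup
};

inline SpeedupSummary summarise(const std::vector<ConfigResult>& results) {
    assert(!results.empty());

    // function name -> (smallest speedup, largest speedup)
    std::map<std::string, std::pair<double, double>> perFunction;
    double total = 0.0;
    for (const auto& r : results) {
        total += r.speedup;
        auto it = perFunction.find(r.function);
        if (it == perFunction.end()) {
            perFunction.emplace(r.function, std::make_pair(r.speedup, r.speedup));
        } else {
            it->second.first = std::min(it->second.first, r.speedup);
            it->second.second = std::max(it->second.second, r.speedup);
        }
    }

    double sumMin = 0.0;
    double sumMax = 0.0;
    for (const auto& entry : perFunction) {
        sumMin += entry.second.first;
        sumMax += entry.second.second;
    }

    const double functionCount = static_cast<double>(perFunction.size());
    return {total / static_cast<double>(results.size()),
            sumMin / functionCount,
            sumMax / functionCount};
}
```

Because GPU Min and GPU Max use exactly one configuration per function, a function with hundreds of tested configurations carries the same weight as one with only a handful, which is not the case for All Configs.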

The results demonstrate that the configuration (function arguments) makes a massive difference to the CPU/GPU performance. That said, even the slowest configurations on the slowest GPUs are in the same ballpark, performance wise, as the fastest configurations on the most powerful CPUs in the test. This, combined with a higher average performance for all GPUs tested, implies that you should nearly always see an improvement when moving to the GPU if you have several OpenCV functions in your pipeline (as long as you don’t keep moving your data to and from the GPU), even if you are using a low end, two generation old laptop GPU (730m).

Now let’s examine some individual OpenCV functions. Because each function has many configurations, the average execution time over all configurations tested is used to calculate each function’s speedup over the i5-4120U. This provides a guide to the expected performance of a function irrespective of the specific configuration. The next figure shows the top 20 functions, where the GPU speedup was largest. It is worth noting that the speedup of the GTX 1060 over all of the CPUs is so large that it has to be shown on a log scale.
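A minimal sketch of the per-function speedup described above, assuming the execution times of every configuration are available in milliseconds for both the baseline CPU and the device under test. `meanTime` and `functionSpeedup` are hypothetical helper names, not OpenCV functions.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Mean execution time over all configurations of one function.
inline double meanTime(const std::vector<double>& timesMs) {
    assert(!timesMs.empty());
    return std::accumulate(timesMs.begin(), timesMs.end(), 0.0) /
           static_cast<double>(timesMs.size());
}

// Speedup of a device over the baseline for one function, calculated as
// the ratio of the mean execution times (not the mean of the ratios).
inline double functionSpeedup(const std::vector<double>& baselineMs,
                              const std::vector<double>& deviceMs) {
    const double deviceMean = meanTime(deviceMs);
    assert(deviceMean > 0.0);
    return meanTime(baselineMs) / deviceMean;
}
```

One consequence of using a ratio of means is that the slowest configurations of a function dominate its speedup, so the result mainly reflects how well the device handles the most expensive arguments.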

Next, the bottom 20 functions, where the GPU speedup was smallest.

The above figure demonstrates that, although the CUDA implementations are on average much quicker, some functions are significantly quicker on the CPU. Generally this is due to the function using the Intel Integrated Performance Primitives for Image processing and Computer Vision (IPP-ICV) and/or SIMD instructions. That said, the above results also show that some of these slower functions do benefit from the parallelism of the GPU, but a more powerful GPU is required to leverage this.

Finally, let’s examine which OpenCV functions took the longest. This is important if you are using one of these functions, as you may consider calling its CUDA counterpart even if it is the only OpenCV function you need. The figure below contains the execution time for the 20 functions which took the longest on the i5-4120U. Again this has to be shown on a log scale, because the GPU execution time is much smaller than the CPU execution time.

Given the possible performance increases shown in the results, if you were performing mean shift filtering with OpenCV on a laptop with only a low end i5-4120U, the execution time of nearly 7 seconds may encourage you to upgrade your hardware. From the above it is clear that it is much better to invest in a tiny GPU (730m), which will reduce your processing time by a factor of 10 to a more tolerable 0.6 seconds, or a mid range GPU (GTX 1060), which will reduce your processing time by a factor of 100 to 0.07 seconds, rather than a mid range i7, which will give you less than a 30% reduction.

To conclude, I would just reiterate that the benefit you will get from moving your processing to the GPU with OpenCV will depend on the function you call and the configuration that you use, in addition to your processing pipeline. That said, from what I have observed, on average the CUDA functions are much quicker than their CPU counterparts. Please let me know if there are any mistakes in my results and/or analysis.